Big Data is a popular buzzword, but once it is used in everyday tasks, practical questions about data governance arise, and they are no different from those asked of any other data storage technology.

When you read about a new technology it can often sound like the answer to everything. Then mundane reality comes along, and things turn out to be far less flashy and much messier than they first appeared. This is also true of Hadoop-related technologies (a.k.a. Big Data), which are at their core nothing more than a file system where you can store a really large number of zeroes and ones.

Imagine the following situation in your department: you receive an announcement from your IT guy - "Dear colleagues, from now on feel free to upload anything onto our shared drive, the cost of storage is close to nothing so knock yourselves out". The data-loving crowd would dump anything they could lay their hands on in there, following the simple reasoning, "What if this data set is useful one day?"

Sanity check - can you, right now, find or discover any useful data on your current shared drives? And do you think you'd be able to if the shared drive size were measured not in terabytes, but in petabytes or even exabytes (one exabyte is 1 billion gigabytes)?

To store data in a traditional relational database, you need to define tables, columns and the relationships between them. You don't need to bother with such details in Big Data technologies: every user can define that structure for their own context, or skip it altogether. The result is far less structure and therefore far less descriptive information about data sets (i.e. metadata). So metadata management, security and governance are in fact becoming more challenging than they used to be with good old-fashioned relational databases.

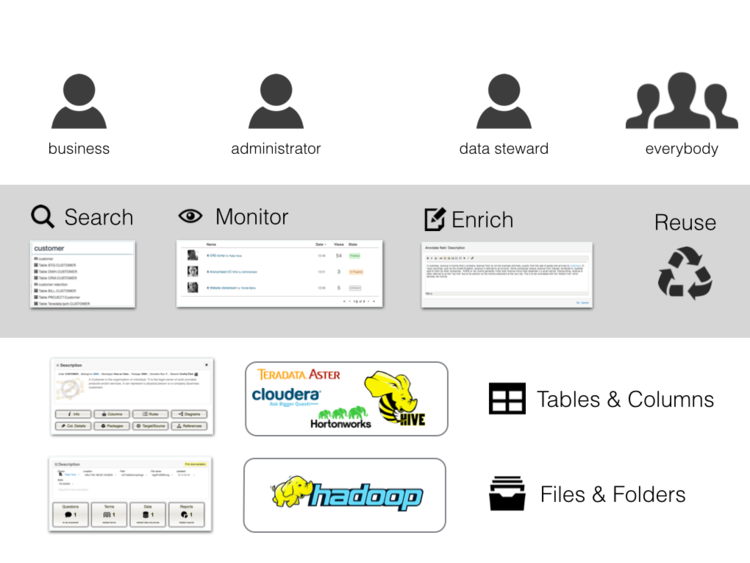

As a vendor of a data governance support tool, we love to address this type of challenge. When you strip away all the hype and focus on the core of the problem, Big Data (Hadoop and derived platforms like Cloudera, Hortonworks, Teradata Aster …) is no different from any other data storage platform. It just has less metadata. So this is how to deal with it:

Using a Hadoop API, we extract the information that's available about the stored content - file names, folders, timestamps, user names … - and publish it in an easy-to-search, understandable wiki-style format, so users can work with it in everyday use cases like the following:

Information worker

• discovery - when you have a question you can browse through the catalogues of existing data to discover where the answers are.

• request - a user can request access to or extracts from existing data-sets, re-using outputs.

Data steward

• governance - documenting data-sets, establishing ownership of them and linking them to other information assets is easy.

• data quality - stewards can assign data quality labels to data-sets in order to avoid the accidental misuse of data.

Hadoop Administrator

• monitoring - the administrator can keep track of new folders / files and check that the minimum data-set descriptions were filed - if not, they can identify the user and request additional info.

• impact analysis - it's important and easy to check if a data-set is used by any report or project before it is purged.
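To make the extraction and monitoring steps more concrete, here is a minimal sketch in Python. It assumes the listing arrives in the JSON shape returned by the WebHDFS `LISTSTATUS` operation; the sample values, the `/data` parent folder, the function names and the `descriptions` dictionary are all hypothetical and only serve as illustration. It is not a description of how any particular tool does it.

```python
from datetime import datetime, timezone

# Sample response in the shape returned by the WebHDFS LISTSTATUS operation.
# The values (names, owners, timestamps) are made up for illustration.
SAMPLE_LISTING = {
    "FileStatuses": {
        "FileStatus": [
            {"pathSuffix": "sales_2015.csv", "type": "FILE", "owner": "alice",
             "length": 1048576, "modificationTime": 1440374400000},
            {"pathSuffix": "clickstream", "type": "DIRECTORY", "owner": "bob",
             "length": 0, "modificationTime": 1440288000000},
        ]
    }
}


def to_catalogue_entries(listing, parent="/data"):
    """Turn a LISTSTATUS response into flat, searchable catalogue entries."""
    entries = []
    for status in listing["FileStatuses"]["FileStatus"]:
        # WebHDFS reports modification time in milliseconds since the epoch.
        modified = datetime.fromtimestamp(
            status["modificationTime"] / 1000, tz=timezone.utc
        )
        entries.append({
            "path": f"{parent.rstrip('/')}/{status['pathSuffix']}",
            "kind": status["type"].lower(),
            "owner": status["owner"],
            "size_bytes": status["length"],
            "modified": modified.date().isoformat(),
        })
    return entries


def missing_descriptions(entries, descriptions):
    """Return (path, owner) pairs for entries with no description filed."""
    return [(e["path"], e["owner"]) for e in entries
            if not descriptions.get(e["path"], "").strip()]


entries = to_catalogue_entries(SAMPLE_LISTING)
# Hypothetical steward-written descriptions, keyed by path.
descriptions = {"/data/sales_2015.csv": "Monthly sales extract."}
todo = missing_descriptions(entries, descriptions)
```

Here `todo` holds the paths that still lack a description, together with the owner the administrator would contact.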

The documentation of a data-set stored in HDFS can be displayed in your data governance (DG) support tool, including all of its relationships to other information assets.
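Those relationships are also what makes impact analysis possible. A rough sketch, assuming the links between information assets and data-set paths are available as a simple mapping (the `USAGE_LINKS` data and both function names are hypothetical):

```python
# Hypothetical links from information assets (reports, projects) to the
# data-set paths they read. In practice these would come from the catalogue.
USAGE_LINKS = {
    "Weekly revenue report": ["/data/sales_2015.csv"],
    "Churn model project": ["/data/clickstream", "/data/sales_2015.csv"],
}


def assets_using(path, usage_links):
    """List assets that read the data-set at `path` or anything beneath it."""
    prefix = path.rstrip("/") + "/"
    return sorted(
        asset for asset, paths in usage_links.items()
        if any(p == path or p.startswith(prefix) for p in paths)
    )


def safe_to_purge(path, usage_links):
    """A data-set is only a purge candidate when nothing depends on it."""
    return not assets_using(path, usage_links)
```

Matching on the path prefix as well as the exact path means that purging a whole folder is checked against everything stored underneath it.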

Big Data is becoming an integral part of the information landscape of today's enterprise environment. You might be using new prefixes to express the storage size, but the everyday tasks of data governance are no different from those on any other data storage platform.

Peter Hora is Co-Founder at Semanta.

Note: this blog was published earlier on the Semanta website on August 24, 2015.

Semanta is marketed in the Netherlands by IntoDQ.
