In the first three articles in this series on AI and Ethics, we focused on the emergence of artificial intelligence, the central place it occupies today, the different factors organizations need to address in order to build their flexible AI muscle, and the AI patterns that have been successfully applied so far. In this fourth and final article, we show how AI applications and patterns can be applied ethically, so that organizations can reap the benefits of AI without violating the ethical foundations we all agree upon.

Ethics is based on well-founded standards of right and wrong that prescribe what humans ought to do. Adopting an ethical approach to the development and use of AI works to ensure organisations, leaders, and developers are aware of the potential dangers of AI, and, by integrating ethical principles into the design, development, and deployment of AI, seek to avoid any potential harm. [1]

Dilemma

In his report The Global Expansion of AI Surveillance, Steven Feldstein [2] notes that governments' use of AI technology for surveillance is growing much faster than experts initially thought: “at least seventy-five out of 176 countries globally are actively using AI technologies for surveillance purposes.” Mass surveillance and even repression are clear signs of AI being used for the dark side. More and more organizations, such as Microsoft and IBM, are raising concerns about AI-powered surveillance. This is where facial recognition can be (mis)used: racial profiling can be an unintended result, which is a clear violation of human rights.

And in this age of AI, can you still believe what you see? Individuals on social media are actively influenced through advanced user profiling and micro-targeted with specific (political) messages designed to change their behavior. The role played by Cambridge Analytica in the 2016 Trump campaign is a well-documented example of how AI can be used to nudge people in a certain direction without them being aware that they are being manipulated. Deep learning models are also used to create fake content, so-called deepfakes, such as the example featuring President Obama [3].

A 2019 customer survey on AI and ethics by the Capgemini Research Institute sheds light on the dilemmas people are struggling with:

• AI algorithms processing data originally collected for other purposes

• Collecting and processing data without consent

• Reliance on machine-led decisions without an explanation of how the decision was arrived at

• Use of (sensitive) personal data

• Biased and unclear recommendations from an AI-based system

• Use of facial recognition technologies by police forces for mass surveillance

The points above can be summarized as challenges to the transparency, explainability and auditability of AI use and of the decisions it produces.

Research conducted in July 2020 [4] found that just over half of customers reported having daily AI-enabled interactions with organizations, including chatbots, digital assistants, facial recognition and biometric scanners, a significant increase from 21% in 2018. More importantly, the growth in interactions and familiarity appears to coincide with customers' willingness to trust these interactions, provided they understand how decisions are arrived at and those decisions are not black-box outcomes coming out of nowhere.

The Paramount Principle

Above all other principles around AI stands the paramount principle: AI should (only) be designed for human benefit and in no way cause any harm to humans.

Principles for Ethical AI

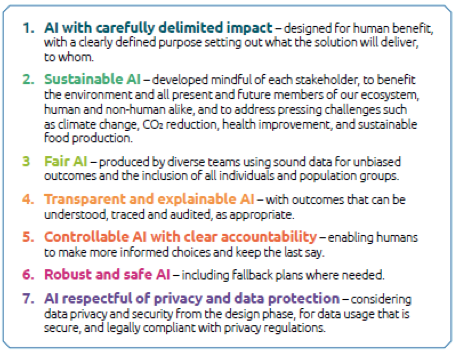

In a whitepaper published by Capgemini earlier this year [5], Ethical AI is decoded into seven principles that apply to any application of AI.

The theme of Ethical AI is, rightfully, getting more and more attention as the (potential) impact of ungoverned AI becomes clearer by the day. Responsible machine learning is moving quickly up the list of priorities. The Ethical ML Network [6], for example, works with 8 principles that somewhat resemble the Hippocratic oath taken by doctors around the world: do no harm.

Legislation in the making

The European Commission appointed a High-Level Expert Group on Artificial Intelligence in 2018 to advise on its strategy for artificial intelligence. Its first deliverable, published in 2019, was the Ethics Guidelines for Trustworthy AI [7], which outlines the legal, ethical and robustness characteristics of trustworthy AI. In 2020 the expert group followed up with the Assessment List for Trustworthy Artificial Intelligence (ALTAI) for self-assessment. The document provides valuable questions and pointers for each of the 7 requirements defined in the Ethics Guidelines.

On April 21, 2021, the European Commission proposed the first-ever legal framework on AI. It applies to providers, users, importers and distributors of AI applications in the EU. The adoption and implementation process is expected to take as long as 2 to 3 years.

In the United States, the ethical (and legal) aspects of AI are also being discussed more and more. For example, the Algorithmic Accountability Act of 2019 [8] was proposed in the US Congress; it “requires specified commercial entities to conduct assessments of high-risk systems that involve personal information or make automated decisions, such as systems that use artificial intelligence or machine learning”. In addition, government bodies such as the National Security Commission on Artificial Intelligence and the Joint Artificial Intelligence Center are looking into AI. The US Federal Trade Commission (FTC) also called for transparent AI in April 2020 [9], stating that when an AI-enabled system makes an adverse decision (such as declining credit to a customer), the organization should show the affected consumer the key data points used to arrive at that decision and give them the right to correct any inaccurate information.
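To make the FTC's point more concrete, the Python sketch below shows one possible way to surface the "key data points" behind an adverse decision. It assumes a simple linear scoring model; the feature names, weights, baseline applicant and approval threshold are all hypothetical, and real credit models and legal disclosure requirements are considerably more involved.

```python
from dataclasses import dataclass

# Hypothetical weights of a linear credit-scoring model (illustrative only).
WEIGHTS = {
    "income_k": 0.04,         # annual income in thousands
    "missed_payments": -0.9,  # missed payments in the last two years
    "debt_ratio": -3.0,       # debt-to-income ratio (0..1)
    "years_employed": 0.15,
}
BIAS = -1.0
THRESHOLD = 0.0  # a score >= THRESHOLD means "approve"

# Hypothetical reference applicant; contributions are measured against it.
BASELINE = {"income_k": 50, "missed_payments": 0, "debt_ratio": 0.3, "years_employed": 5}


@dataclass
class Decision:
    approved: bool
    score: float
    # (feature, value, contribution) for the features that lowered the score most
    key_factors: list


def score(applicant: dict) -> float:
    """Linear score: bias plus weighted sum of the applicant's features."""
    return BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def decide(applicant: dict, top_n: int = 2) -> Decision:
    """Make a decision and report the data points that pushed it down most."""
    s = score(applicant)
    # Contribution of each feature relative to the baseline applicant.
    contributions = {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}
    # Keep only negative contributions, most negative first.
    negatives = sorted(
        ((f, applicant[f], c) for f, c in contributions.items() if c < 0),
        key=lambda item: item[2],
    )
    return Decision(approved=s >= THRESHOLD, score=s, key_factors=negatives[:top_n])


if __name__ == "__main__":
    applicant = {"income_k": 30, "missed_payments": 3, "debt_ratio": 0.6, "years_employed": 1}
    result = decide(applicant)
    print("approved:", result.approved)
    for feature, value, contribution in result.key_factors:
        print(f"{feature}={value} lowered the score by {-contribution:.2f}")
```

If the consumer corrects an inaccurate value, for example a missed payment that was recorded by mistake, the decision can simply be re-run with `decide` on the updated record, which is one way to support the right to fix incorrect information.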

Nevertheless, all organizations working with AI should already focus on carrying out the self-assessment and implementing additional measures wherever required. This is not an area in which you want to stand out negatively. AI is indeed a powerful catalyst for innovation, but you need to ensure that this innovation happens within the guardrails of ethics, fairness and non-bias.

The philosophical angle

Tony Fish, visiting lecturer in AI and Ethics at LSE, asked: “If we teach our kids morality, then why not teach the machines?”

In general, every technology we create is intended to solve problems for humans. On the other hand, new technology can also be turned against humans. Does this mean we should stop innovating? Or should we have a fundamental discussion about the morality that should govern innovation with AI? What outcomes do we want from AI? How would the intended use of AI make us feel if we were its subject?

Should we not make public everything contained in the Pandora’s box of AI, so that others can form an opinion on how well an AI algorithm adheres to the ethics guidelines and validate that it works fairly and without bias? Maybe we can even have AI algorithms validate how ethical other AI algorithms are?
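Validating that an algorithm works without bias can start with simple checks that are easy to automate. The Python sketch below assumes access to a model's yes/no decisions and a protected attribute for each case, and computes the demographic parity difference: the gap between the highest and lowest approval rates across groups. The group labels, sample data and 0.1 tolerance are hypothetical; demographic parity is only one of several fairness metrics, and passing it does not by itself prove that a system is fair.

```python
from collections import defaultdict


def approval_rates(decisions, groups):
    """Share of positive decisions (1/True) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += int(decision)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group approval rates."""
    rates = approval_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


def passes_parity_check(decisions, groups, tolerance=0.1):
    """True if the approval-rate gap stays within the (hypothetical) tolerance."""
    return demographic_parity_difference(decisions, groups) <= tolerance


if __name__ == "__main__":
    # Hypothetical decisions for two groups, A and B.
    decisions = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["A"] * 4 + ["B"] * 4
    print(approval_rates(decisions, groups))
    print("passes:", passes_parity_check(decisions, groups))
```

A check like this could run automatically whenever a model is retrained, flagging large gaps for human review rather than deciding on its own whether the model is ethical.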

Finally

This concludes our series of articles on AI. We stand by the title of the series: AI is a powerful concept, and a future without it is unimaginable, but it comes with great responsibilities. Even if we have inspired only one organization to take all aspects of AI more seriously, we have accomplished our goal.

1. Ethical AI Advisory (Ethicalai.ai)

2. https://carnegieendowment.org/2019/09/17/global-expansion-of-ai-surveillance-pub-79847

3. https://youtu.be/cQ54GDm1eL0

4. Capgemini Research Institute, ‘The art of customer-centric artificial intelligence’, July 2020.

5. https://www.capgemini.com/2021/04/ethical-ai-decoded-in-7-principles/

6. https://ethical.institute/principles.html

7. https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai

8. https://www.congress.gov/bill/116th-congress/house-bill/2231

9. https://www.ftc.gov/news-events/blogs/business-blog/2020/04/using-artificial-intelligence-algorithms

This blog has been co-authored with Erwin Vorwerk.
